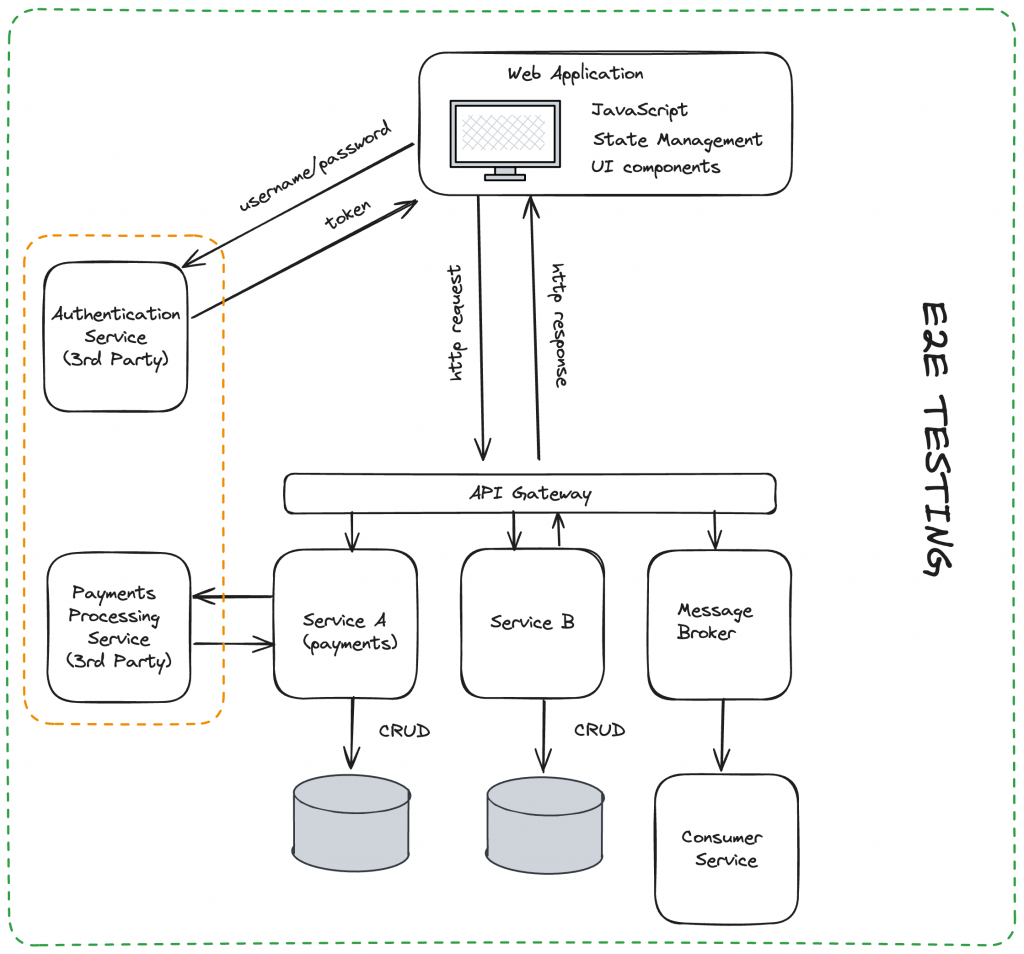

When building an E2E testing solution, it is important to understand how the application works, not only from the UI (black box) perspective but also how backend services interact with one another, how many third-party services are involved, whether there are any message brokers, and so on. In this blog post, let’s look at what it takes to design a proper E2E testing solution.

Application Under Test (AUT)

Before we dive into the Cypress E2E testing setup, let’s look at a basic web application design.

As you can see even basic applications might have things like:

- frontend with complex JavaScript, UI components, and state management

- backend services that communicate with databases through different network layers

- third-party integrations

- API gateway that exposes public endpoints; we also have to account for private endpoints, such as test helpers or anything else that can only be consumed within our network

- message brokers that take time to process messages, and so on

All of this needs to be taken into consideration when designing our test automation solution.
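One practical consequence of the message broker point is that a test often cannot assert on a result immediately after triggering an action. A minimal sketch of a polling helper is shown below. The name `pollUntil` and its options are hypothetical and not part of Cypress; the `check` callback stands in for whatever lookup your application offers, for example a call to a private test-helper endpoint.

```typescript
// Hypothetical helper (not a Cypress API): repeatedly run an async check
// until it yields a value or the timeout expires.
type PollOptions = { timeoutMs: number; intervalMs: number };

async function pollUntil<T>(
  check: () => Promise<T | undefined>,
  { timeoutMs, intervalMs }: PollOptions,
): Promise<T> {
  const deadline = Date.now() + timeoutMs;
  for (;;) {
    const result = await check();
    // Any defined value counts as "the broker has finished its work".
    if (result !== undefined) return result;
    // Give up if another wait would take us past the deadline.
    if (Date.now() + intervalMs > deadline) {
      throw new Error(`Condition not met within ${timeoutMs} ms`);
    }
    await new Promise<void>((resolve) => setTimeout(resolve, intervalMs));
  }
}
```

Inside a Cypress test, a promise like this could be handed to `cy.wrap` with a suitable `timeout` option so that the command queue waits for it. Whether polling or a dedicated test hook is the better fit depends on how your broker exposes its state.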

Test Framework Setup

Now that we have a basic view of our application, let’s take a look at what a typical Cypress E2E testing setup looks like.

Test Data Management

Now let’s take a closer look at test data management. I prefer to separate it into two parts:

- Test Data Management: there are different ways to seed data, including a DB snapshot, seeding through the API, or a mix of both.

- Test User Management: you can have a pool of static users, dynamically generated users, or both. As an example, you might have a pool of static users with subscriptions. Every time you run the tests, you check whether the subscription is still valid and, if it is not, re-subscribe the user or create a new one with the same subscription.
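The subscription check from the example above can be sketched as a small decision function. This is only an illustration under assumptions: the `TestUser` shape, the `UserAction` names, and the rule "create a user only when the pool has none" are all hypothetical, and the actual re-subscribe or create calls would go through your own API.

```typescript
// Hypothetical shape of a pooled test user; adapt the fields to your application.
type TestUser = { email: string; subscriptionExpiresAt: Date | null };

// What the test setup should do with a user before the run starts.
type UserAction = "reuse" | "resubscribe" | "create";

function planUserAction(user: TestUser | undefined, now: Date): UserAction {
  // No static user available in the pool: generate a fresh one.
  if (user === undefined) return "create";
  const expiry = user.subscriptionExpiresAt;
  // Subscription still valid: the user can be used as-is.
  if (expiry !== null && expiry.getTime() > now.getTime()) return "reuse";
  // Subscription missing or expired: renew it before running tests.
  return "resubscribe";
}
```

Keeping the decision separate from the API calls makes it easy to unit test and to run once in a global setup step before the E2E suite starts.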

Continuous Integration (CI) Pipeline

When running E2E tests in CI, it is good practice to divide tests into different levels (similar to how Microsoft explains it in their blog post). I typically go with 3-4 levels:

L0 – runs on pre-commit (rarely seen for E2E testing)

L1 – most critical tests, run when a PR is opened and when a new commit is pushed to an open PR

L2 – critical tests, run on PR approval, before the PR is merged

L3 – the rest of the tests; a bug missed at this level would not be high severity. Run nightly, or on the staging environment from main (the release branch)

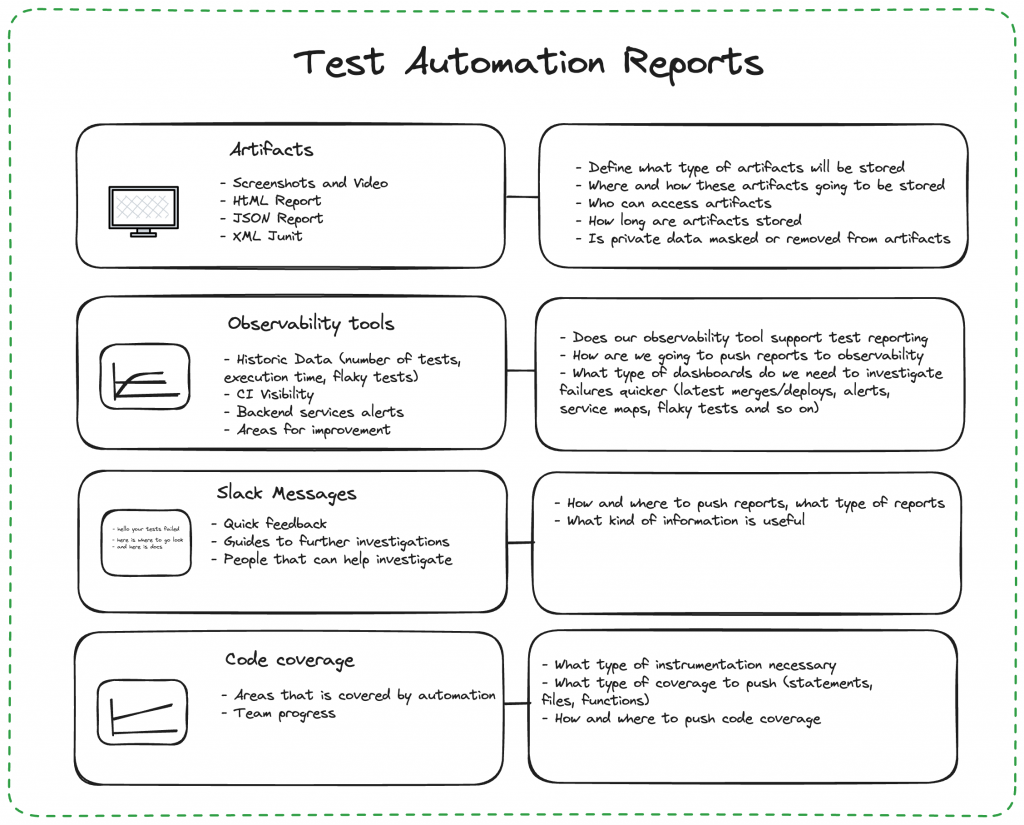
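The level scheme above can be expressed as a simple mapping from CI trigger to test level. The sketch below is one possible way to do it, not a Cypress feature: the trigger names, the `TaggedTest` shape, and the idea of attaching a level to each test are assumptions made for illustration.

```typescript
type Level = "L0" | "L1" | "L2" | "L3";

// Hypothetical trigger names; map them to whatever events your CI system emits.
type Trigger = "pre-commit" | "pr-update" | "pr-approval" | "nightly";

// Which level runs on which trigger, following the list above.
const levelForTrigger: Record<Trigger, Level> = {
  "pre-commit": "L0",
  "pr-update": "L1", // PR opened or new commit pushed
  "pr-approval": "L2", // after approval, before merge
  "nightly": "L3", // nightly or staging run on main
};

// Assumed metadata: every test carries exactly one level.
type TaggedTest = { title: string; level: Level };

// Return the titles of the tests that should run for a given trigger.
function selectTests(tests: TaggedTest[], trigger: Trigger): string[] {
  const level = levelForTrigger[trigger];
  return tests.filter((t) => t.level === level).map((t) => t.title);
}
```

In practice you might prefer cumulative levels, where a nightly run also includes L1 and L2. That is a design choice, and the mapping can hold a list of levels per trigger instead of a single one.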

Test Reports

Another important aspect of the test automation framework to think about is test reports.