There’s a lot of talk about AI replacing SQEs (software quality engineers) and SDETs (software development engineers in test). Here’s why AI still falls short when it comes to testing:

1. AI Can’t Architect Test Automation Frameworks

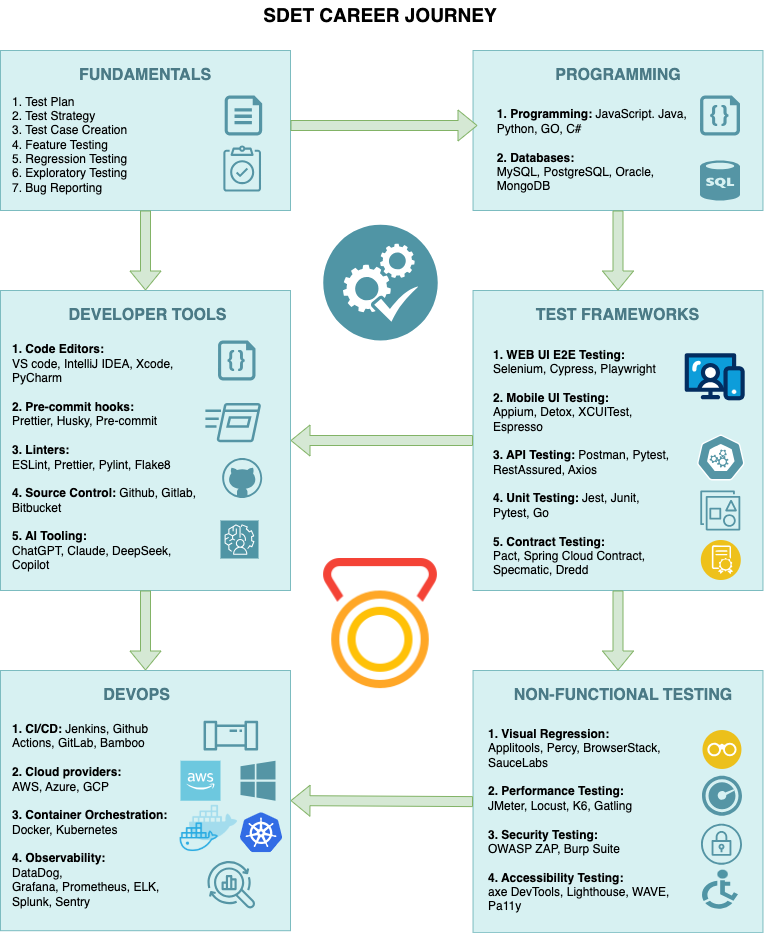

AI can generate test cases, but who decides what gets tested, and how? Test automation isn’t just writing test scripts. It means building scalable, maintainable, efficient frameworks that integrate with CI/CD, monitoring, and reporting systems. SDETs design these frameworks and keep them reliable and adaptable as the product evolves.

2. AI Can’t Enforce Quality Standards in CI/CD

Modern software development relies on fast feedback loops and continuous testing. SDETs set up linting, pre-commit hooks, code coverage checks, and quality gates to prevent bad code from reaching production. AI may assist in running tests, but it doesn’t define or enforce these best practices across teams.
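
A quality gate ultimately comes down to a check that fails the pipeline when a metric misses its bar. Below is a minimal sketch in Python. It assumes a coverage report in the JSON format produced by coverage.py’s `coverage json` command, which stores the overall figure under `totals.percent_covered`. The 80% threshold and the function names are hypothetical.

```python
import json

# Hypothetical minimum total line coverage, in percent.
COVERAGE_THRESHOLD = 80.0


def coverage_gate(report_json: str, threshold: float = COVERAGE_THRESHOLD) -> bool:
    """Return True if total coverage in the report meets the threshold.

    Assumes coverage.py's JSON report layout, where the overall
    percentage is stored under totals.percent_covered.
    """
    report = json.loads(report_json)
    percent = float(report["totals"]["percent_covered"])
    return percent >= threshold


def gate_exit_code(report_json: str, threshold: float = COVERAGE_THRESHOLD) -> int:
    """Map the gate result to a process exit code: 0 passes CI, 1 fails it."""
    return 0 if coverage_gate(report_json, threshold) else 1
```

In a CI step, you would read the report file and pass the result of `gate_exit_code(...)` to `sys.exit`, so that a non-zero code fails the stage. Choosing the threshold and getting teams to agree on it is the part no tool decides for you.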

3. AI Can’t Optimize Test Infrastructure

Running tests efficiently at scale requires containerization (Docker), orchestration (Kubernetes), and test parallelization. SDETs configure test execution environments, set up mock services, and manage infrastructure as code (IaC) to keep test runs fast and reproducible. AI can’t weigh infrastructure cost against stability and speed; those trade-offs are engineering decisions.
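
One concrete piece of that work is splitting a suite across parallel workers so that no single shard becomes the bottleneck. Below is a minimal sketch using the longest-processing-time-first greedy heuristic. It assumes you have per-test durations from earlier runs; the test names and timings in the example are hypothetical.

```python
import heapq


def partition_by_duration(durations: dict[str, float], workers: int) -> list[list[str]]:
    """Greedily assign tests to workers so total runtimes stay balanced.

    Longest-processing-time-first: sort tests by descending duration,
    then always give the next test to the currently least-loaded worker.
    """
    if workers < 1:
        raise ValueError("workers must be >= 1")
    # Min-heap of (total seconds assigned so far, worker index).
    heap = [(0.0, i) for i in range(workers)]
    buckets: list[list[str]] = [[] for _ in range(workers)]
    for name, secs in sorted(durations.items(), key=lambda kv: kv[1], reverse=True):
        load, idx = heapq.heappop(heap)  # least-loaded worker
        buckets[idx].append(name)
        heapq.heappush(heap, (load + secs, idx))
    return buckets
```

Consider two workers and hypothetical durations of 120, 90, 60, 45, and 30 seconds. The sketch produces shards that total 165 and 180 seconds. A greedy heuristic is not guaranteed to be optimal, but it is cheap and usually close enough for CI sharding.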

4. AI Can’t Interpret Observability Data & Debug Failures

Even if AI detects failures, who fixes them? Pinpointing root causes in modern systems depends on logging, distributed tracing, and performance monitoring. SDETs analyze failures across tools like Grafana, Prometheus, Jaeger, and Sentry. AI lacks the deep understanding needed to debug complex interactions between services.
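
Distributed tracing helps because every log line from one request carries the same trace ID. That lets you tie a failure deep in one service back to the request that triggered it. Below is a minimal, tool-agnostic sketch. It assumes logs have already been exported as records with hypothetical `trace_id`, `service`, `level`, and `message` fields, in timestamp order. A real setup would query a tracing backend such as Jaeger or a log store instead.

```python
from collections import defaultdict


def failing_traces(records: list[dict[str, str]]) -> dict[str, list[str]]:
    """Return the full cross-service history of every trace that logged an ERROR.

    Assumes each record has the hypothetical keys trace_id, service,
    level and message, and that records are already in timestamp order.
    """
    history: dict[str, list[str]] = defaultdict(list)
    failed: set[str] = set()
    for rec in records:
        history[rec["trace_id"]].append(f"{rec['service']}: {rec['message']}")
        if rec["level"] == "ERROR":
            failed.add(rec["trace_id"])
    # Keep first-seen trace order, but only for traces that contain an error.
    return {tid: lines for tid, lines in history.items() if tid in failed}
```

The value comes from seeing the whole path, such as gateway then payments, rather than an isolated error line. Deciding which hop actually caused the failure is still the engineer’s job.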

5. AI Can’t Define Non-Functional Testing Strategies

Testing isn’t just about verifying functionality. SDETs handle performance, security, and visual regression testing, ensuring software is fast, secure, and visually correct. AI can assist in running load tests (e.g., Locust, k6) or security scans (e.g., OWASP ZAP), but it doesn’t define the strategy, analyze results, or ensure compliance.
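
Analyzing results often means turning raw measurements into a pass/fail decision against an agreed budget. Below is a minimal sketch. It assumes per-request latencies in milliseconds have been exported from a load-testing tool as a plain list. It uses the nearest-rank definition of percentile, and the budget values are hypothetical.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def within_budget(latencies_ms: list[float], p95_budget_ms: float) -> bool:
    """Check a load-test run against a (hypothetical) p95 latency budget."""
    return percentile(latencies_ms, 95) <= p95_budget_ms
```

For instance, with latencies of 1 to 100 ms, the nearest-rank p95 is 95 ms. A 100 ms budget therefore passes and a 90 ms budget fails. Which percentile matters and what the budget should be are strategy decisions made before the first test runs.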

6. AI Itself Needs Testing & Validation

AI models are not perfect: they can introduce bias, false positives, and incorrect assumptions. Ironically, AI-generated test cases themselves need human validation to ensure they are accurate and relevant. SDETs test AI by validating models, analyzing decision-making patterns, and preventing false test failures.
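
One small, concrete part of preventing false test failures is separating flaky tests from genuine regressions before trusting a red build. This applies whether a person or an AI wrote the tests. Below is a minimal sketch. It assumes a test is a plain callable that signals failure by raising `AssertionError`, which simplifies how real runners such as pytest report failures. The default of five reruns is an arbitrary, hypothetical choice.

```python
from collections.abc import Callable
from typing import Literal

Verdict = Literal["pass", "fail", "flaky"]


def classify(test: Callable[[], None], runs: int = 5) -> Verdict:
    """Rerun a test several times and classify the combined outcome.

    A run fails if the test raises AssertionError. A mix of passes and
    failures across reruns points to flakiness, not a real regression.
    """
    if runs < 1:
        raise ValueError("runs must be >= 1")
    outcomes: list[bool] = []
    for _ in range(runs):
        try:
            test()
            outcomes.append(True)
        except AssertionError:
            outcomes.append(False)
    if all(outcomes):
        return "pass"
    if not any(outcomes):
        return "fail"
    return "flaky"
```

A "flaky" verdict doesn’t say why the test is unstable. Tracking down shared state, timing assumptions, or a bad AI-generated assertion is still investigative work.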

AI is a powerful tool that enhances testing, but it doesn’t replace SDETs. Their real value isn’t just writing automation. It’s architecting test frameworks, optimizing test infrastructure, enforcing CI/CD quality gates, debugging failures, and ensuring software reliability.

Instead of fearing AI, SDETs should embrace it as a tool, using it to automate repetitive tasks, improve efficiency, and free up time for more strategic testing work. The future of software quality isn’t AI versus SDETs; it’s AI + SDETs working together.