I recently presented at the Chicago QA Association, where I talked about changing an organization’s quality culture. As a company progresses through various stages, it’s crucial to ensure that its quality culture evolves to meet the changing demands of the business. In this blog post, I’ll look into strategies for implementing a “shift left” approach and expediting quality processes to support the growth of the company. Specifically, we’re going to look at three key points:

- Strategic Adaptability

- Navigating Resistance

- Scalability Strategies

Strategic Adaptability

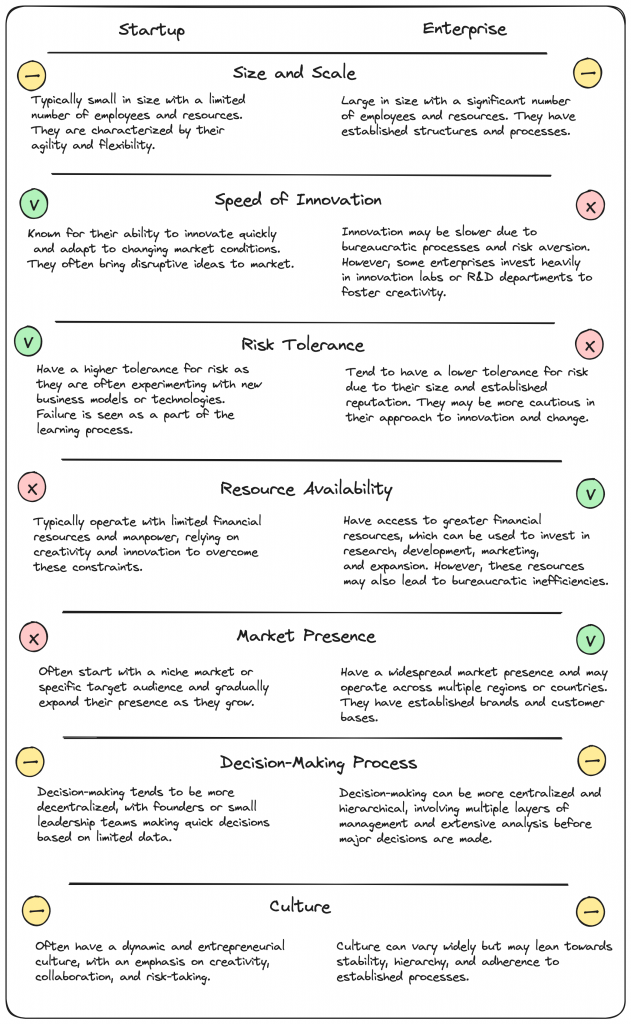

Strategic adaptability is an important aspect of a shift-left mindset. Before we work on it, let’s review the key differences between startups and enterprises.

From the image above, we can see that startups win on Speed of Innovation and Risk Tolerance but lag on Resource Availability and Market Presence. The transition from one stage to the next is the perfect time to make the change and set the company’s path for the years ahead.

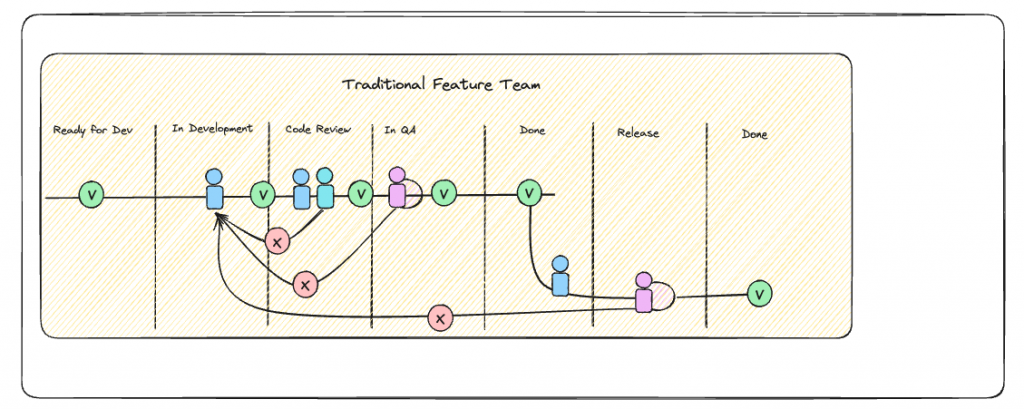

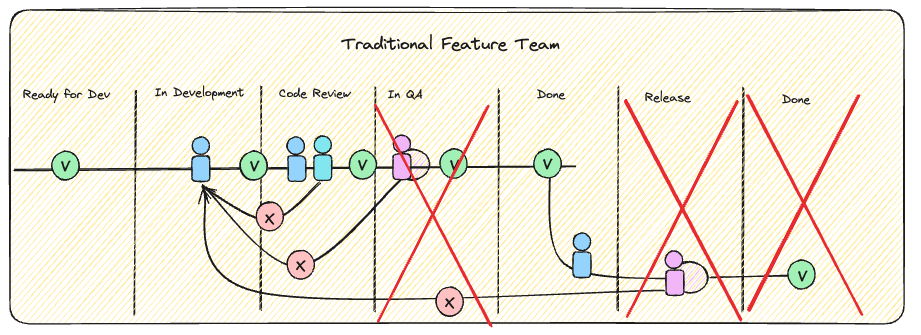

Let’s look at a traditional feature team operating in two-week sprints. We’re not going to talk about sprint ceremonies here; instead, we’ll focus on the feature lifecycle.

As you can see in the image above, there are lots of things happening. If we zoom in on the testing stage, we can see that the QA engineer spends some time on:

– Learning the new feature

– Testing it manually

– Writing some automation (in my experience, this typically gets skipped as more tasks come in)

If QA Engineers find an issue, they move the story back to development. By that time, the developer may have already started working on another feature. Fixing the issue will then require the developer to either switch contexts or put the new feature on hold until the issue is resolved.

Another observation I’ve made is that developers often hold off on moving features down the chain until the end of the sprint. This allows them to address any issues that arise all at once, rather than dealing with them individually as they come up.

You might think that’s the end of it, but it’s not. Typically, a release branch undergoes regression tests and exploratory testing by QA Engineers. If an issue is discovered during this testing phase, it goes back to the beginning of the cycle and has to pass through all the stages again.

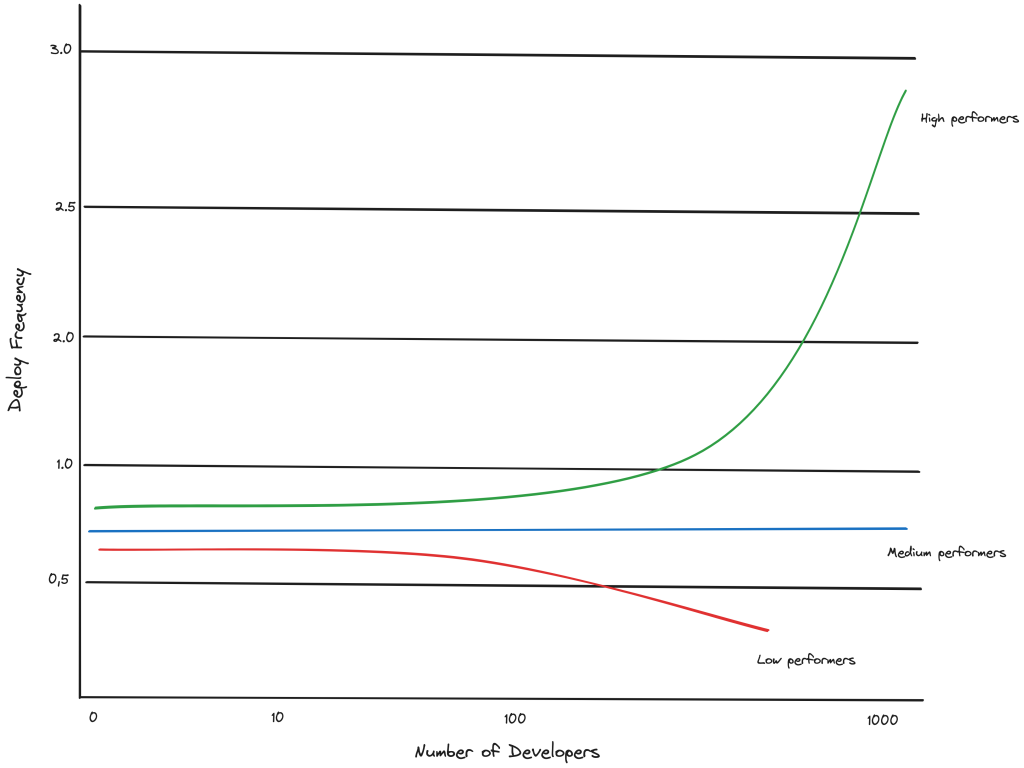

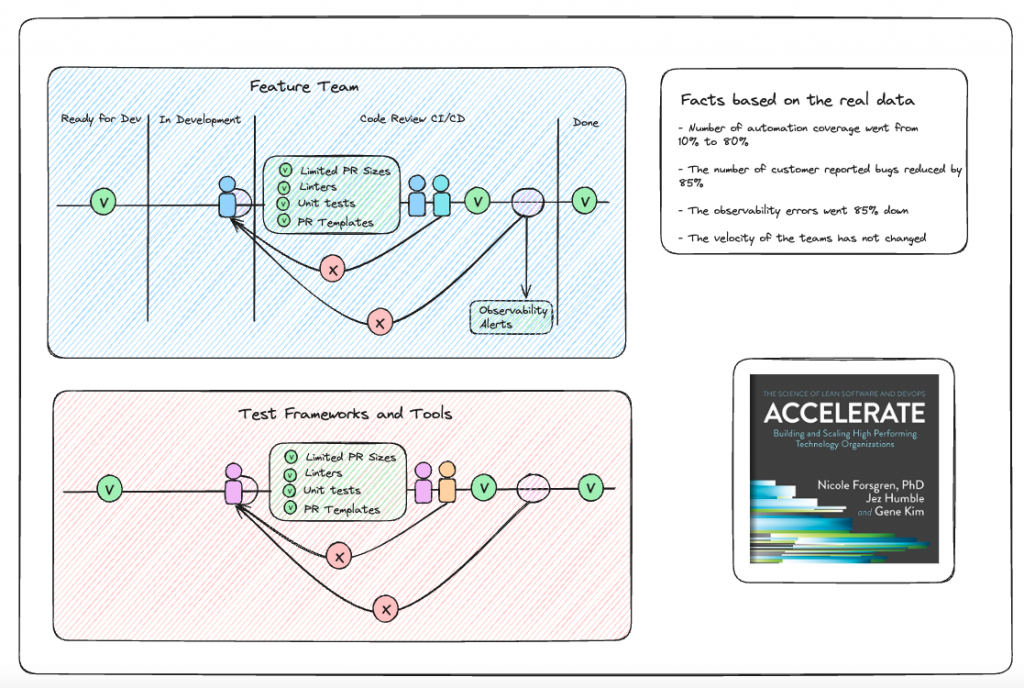

In the book Accelerate, the authors found that high-performing teams deploy more often than low-performing teams. That difference has a significant impact as the company grows.

So can we save some time by shortening the cycle? And what happens if we remove QA from the feature development cycle? In that case, we would have to shift left and couple it with trunk-based development.

The image below illustrates our proposed improvements:

- Shift E2E Testing Left: Move end-to-end testing responsibilities to developers.

- Add Automation Checks: Implement additional automation such as limiting PR sizes, using linters, and utilizing PR templates.

- Trigger E2E Regression Testing: Automatically initiate end-to-end regression tests whenever a feature is ready to merge, upon PR approval or addition to the merge queue. If all tests pass, the feature can be merged.

- Integrate with Observability Tools: Couple our E2E testing with observability tools like DataDog, ELK, and Splunk to receive alerts if issues arise in the UI layer or backend services.

- Ensure DoD Compliance: Developers must ensure that the feature meets the agreed Definition of Done (DoD).

If all checks are green, we can safely merge and deploy our changes.
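The checks listed above can be combined into a single merge gate. The following is a minimal sketch, not a real CI integration: the `PullRequest` fields, the `merge_blockers` function, and the `MAX_CHANGED_LINES` threshold are all hypothetical names chosen for illustration, and the values a real pipeline would read from its CI system are passed in by hand here.

```python
from dataclasses import dataclass, field

# Hypothetical threshold; pick a value that fits your team.
MAX_CHANGED_LINES = 400


@dataclass
class PullRequest:
    """Minimal stand-in for the PR data a CI system would supply."""
    changed_lines: int
    lint_passed: bool
    e2e_passed: bool
    # Maps each Definition of Done item to whether it has been met.
    dod_items: dict = field(default_factory=dict)


def merge_blockers(pr: PullRequest) -> list:
    """Return the reasons a PR cannot merge; an empty list means all green."""
    blockers = []
    if pr.changed_lines > MAX_CHANGED_LINES:
        blockers.append(
            f"PR too large: {pr.changed_lines} > {MAX_CHANGED_LINES} lines"
        )
    if not pr.lint_passed:
        blockers.append("linter failed")
    if not pr.e2e_passed:
        blockers.append("E2E regression failed")
    for item, done in pr.dod_items.items():
        if not done:
            blockers.append(f"DoD item not met: {item}")
    return blockers
```

Returning the list of blockers, rather than a single pass/fail flag, lets the pipeline tell the developer exactly which check to fix.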

That’s great, but what about the QA Engineers? We can form a Test Frameworks and Tools team. This team will be responsible for supporting test infrastructure, managing test data, ensuring test stability, and improving execution times. Additionally, they will focus on enhancing the developer experience by regularly polling developers to identify pain points and areas for improvement.

Navigating Resistance

When the team attempts to implement a shift-left approach, they often face resistance from various sides. Here are some common arguments:

Developers:

- “If I wanted to do testing, I would have applied for a tester job.”

- “I’m not a testing expert.”

- “E2E testing is flaky.”

- “E2E testing is slow.”

Engineering Managers:

- Concern about developers being stressed.

- Worry that velocity will decrease.

- Lack of trust in automation.

QA Engineers:

- Fear of losing their jobs.

- Limited trust in the automation they are creating.

Technical Challenges:

- Stability of environments.

- Testability of the product.

- Dependencies on third-party services.

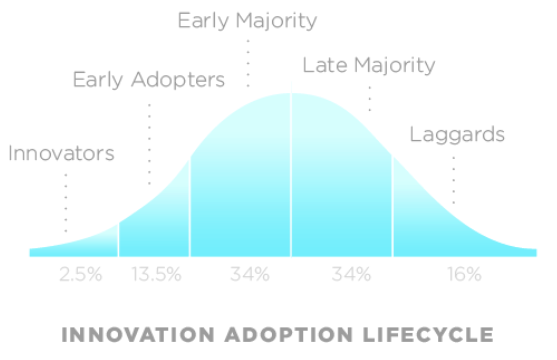

So what can we do to overcome resistance? You have most likely heard of the innovation adoption lifecycle.

Looking at the graph above, we can see that there will always be some open-minded individuals willing to give it a try. These people are your locomotives. Make sure to publicly recognize their efforts and celebrate their successes. Here are some other important key factors to help navigate resistance:

Buy-In from Upper Management: Securing buy-in from upper management is crucial. While they can be a tough crowd to please, presenting robust data that demonstrates the benefits of the shift-left approach can earn their support.

Show the Big Picture and Be Transparent: People are more likely to engage if they understand the vision and the overall goals. Communicate the big picture clearly and transparently, ensuring everyone sees the direction and purpose.

Lead by Example: As a technical leader and expert, it’s essential to practice what you preach. Lead by example and don’t be afraid to make mistakes. Demonstrating your commitment and willingness to learn will inspire others to follow suit.

Present in Guilds and Teams: Take advantage of tech guilds (Testing, Front-End, Back-End, Mobile) to present your vision and strategy. If guild presentations aren’t sufficient, schedule individual sessions with each team to ensure comprehensive understanding and buy-in.

Write Clear Guides and Documentation: Develop clear guides and documentation to support the new approach. This will save time and effort, especially for new hires, by providing them with the resources they need to understand and adopt the new test framework.

Back Up Directions with Data: Track the initial state and ongoing progress to ensure the system is delivering as promised. Monitoring data helps validate the approach and allows for timely adjustments if the system goes off track.

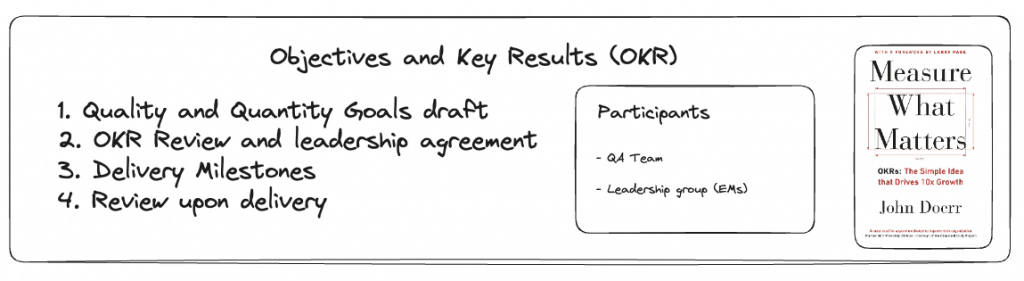

One of the mechanisms I prefer to use is creating quarterly OKRs.

Scalability Strategies

The final topic in today’s blog post is scalability strategies. Scalability is a critical component of a strong quality culture because building something that can’t scale leads to frustration and inefficiency. Let’s answer some questions before we develop a strategy:

Are we enhancing the testability of the application by addressing technical debt? It’s essential to deeply understand the system under test, identifying what can be done in the current state and what improvements are desired. For instance, if unpredictable third-party dependencies cause flaky tests, several strategies can be considered.

- Mocking Third-Party Dependencies: One approach is to mock the behavior of third-party dependencies, assuming that the provider is responsible for testing their functionality.

- Implementing Health Checks: Another option is to establish health checks to verify that integrations with third-party services are functioning properly.

- Utilizing Test Modes: Using test modes for third-party applications can provide controlled environments for testing their functionality.
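As an illustration of the mocking strategy above, here is a minimal sketch using Python’s standard `unittest.mock`. The `checkout` function, the `payment_client` object, and its `charge` method are hypothetical stand-ins for whatever third-party integration your product has; they are not taken from any real provider’s API.

```python
from unittest.mock import Mock


def checkout(cart_total, payment_client):
    """Code under test: charge the customer through a third-party client."""
    response = payment_client.charge(amount=cart_total)
    return "confirmed" if response["status"] == "succeeded" else "declined"


def test_checkout_confirms_on_successful_charge():
    # The mock replaces the real provider, so the test never leaves the process
    # and cannot fail because of the provider's availability.
    payment_client = Mock()
    payment_client.charge.return_value = {"status": "succeeded"}

    assert checkout(25.0, payment_client) == "confirmed"
    payment_client.charge.assert_called_once_with(amount=25.0)


def test_checkout_declines_on_failed_charge():
    # The same mock can simulate failure paths that are hard to trigger
    # against a real third-party service.
    payment_client = Mock()
    payment_client.charge.return_value = {"status": "failed"}

    assert checkout(25.0, payment_client) == "declined"
```

The trade-off is that a mock only checks your side of the contract, which is why it pairs well with the health checks mentioned above.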

Is speed a priority? Should we establish Service Level Agreements (SLAs) for automation test execution? Speed is indeed crucial. If developers face long wait times to merge their changes due to slow test execution, it can hinder productivity. Therefore, ensuring that end-to-end tests run swiftly and can be executed in parallel is imperative.
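To make the idea of an execution-time SLA concrete, here is a rough sketch that runs independent test callables in parallel and compares the elapsed time against a budget. `run_suite`, `within_sla`, and the `SLA_SECONDS` value are assumptions made for illustration; in practice you would more likely rely on your test runner’s own parallel mode and record the duration in CI.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical budget for the whole suite, in seconds.
SLA_SECONDS = 600


def run_suite(tests, max_workers=4):
    """Run independent test callables in parallel.

    Returns a tuple of (list of results in input order, elapsed seconds).
    """
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(lambda test: test(), tests))
    elapsed = time.perf_counter() - start
    return results, elapsed


def within_sla(elapsed, sla_seconds=SLA_SECONDS):
    """Return True when the suite finished inside the agreed budget."""
    return elapsed <= sla_seconds
```

This only works when tests do not share state, which is one more reason to invest in test data management and isolation.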

Should our test automation framework be comprehensive and user-friendly (utilizing modular design and centralized reports)? It depends. While comprehensive and easy-to-use frameworks are ideal, it’s essential to align with the preferences and expertise of the development team. Modular design patterns, such as those found in modern web frameworks like React and Vue.js, can simplify maintenance and scalability.

Should the test automation framework be integrated closely with developers or treated as a stand-alone project? Generally, integration with the development team yields better results. Minimizing context switching and fostering collaboration between developers and testers can enhance efficiency and alignment with business objectives.

How do we measure Developer Experience (DX)? Monitoring DX and adjusting based on feedback is critical for the success of a test automation framework. Regular assessments, surveys, and feedback mechanisms can provide insights into developer satisfaction and areas for improvement, ensuring that the framework meets the needs of its users effectively.

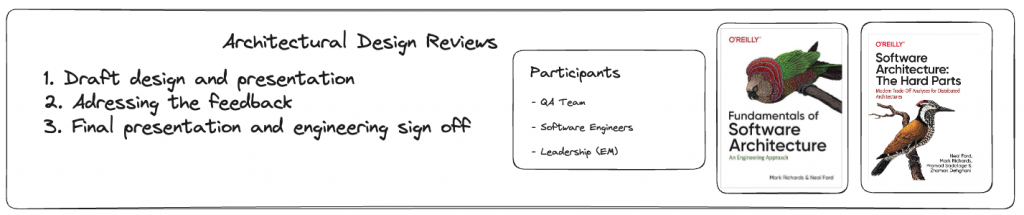

Let’s take a look at how we can enhance the scalability strategy. The first thing that comes to mind is participating in Architectural Design Reviews (ADRs), which can be highly beneficial. ADRs provide a structured framework for discussing and evaluating architectural decisions, ensuring that scalability concerns are addressed early in the development process. Here’s how ADRs can contribute to improving scalability strategy:

- Early Identification of Scalability Challenges: ADRs allow teams to identify potential scalability challenges during the design phase of a project. By reviewing architectural decisions, teams can proactively address scalability concerns before they become critical issues.

- Collaborative Decision-Making: ADRs involve key stakeholders from various teams, including developers, architects, and product managers. This collaborative approach fosters alignment and ensures that scalability considerations are integrated into the overall design process.

- Risk Mitigation: By discussing architectural decisions in a structured manner, ADRs help teams identify and mitigate risks associated with scalability. This proactive approach reduces the likelihood of scalability-related issues arising later in the development lifecycle.

- Knowledge Sharing: ADRs provide an opportunity for knowledge sharing and cross-team collaboration. By participating in ADRs, team members gain insights into the scalability challenges and solutions being considered across the organization.

- Continuous Improvement: ADRs promote a culture of continuous improvement by encouraging teams to reflect on past decisions and learn from their experiences. By regularly reviewing and updating architectural decisions, teams can adapt their scalability strategy to evolving requirements and technologies.

In summary, participating in Architectural Design Reviews (ADRs) can significantly contribute to improving scalability strategy by facilitating early identification of challenges, fostering collaborative decision-making, mitigating risks, promoting knowledge sharing, and supporting continuous improvement efforts.

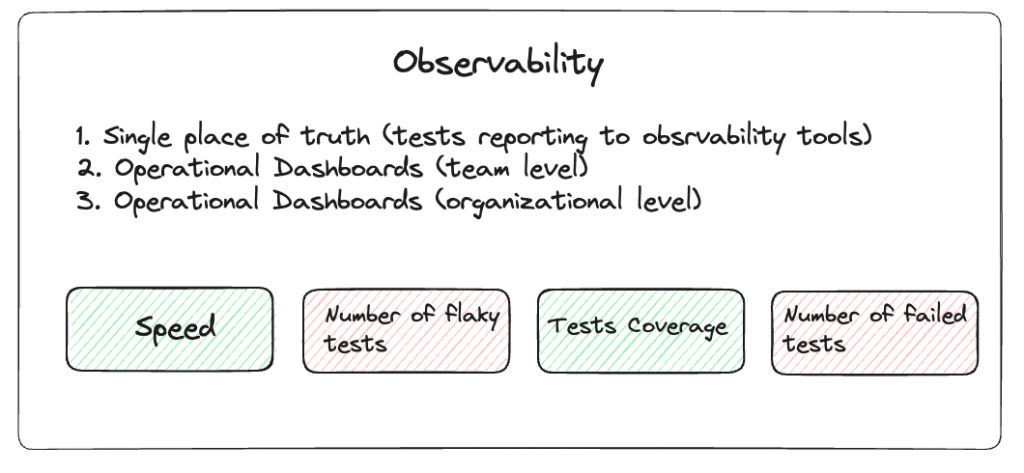

Another critical aspect of maintaining and improving a test framework is observability. A well-designed dashboard provides valuable insights into the state of the framework, enabling teams to adjust and make improvements as needed. Here’s how to approach dashboard design based on the intended audience:

- Management Dashboard:

  - Focus on high-level metrics and key performance indicators (KPIs) that provide an overview of the test framework’s health.

  - Highlight metrics such as test execution trends, overall test coverage, and stability indicators.

  - Present information in a visually appealing and easy-to-understand format, allowing management to quickly assess the framework’s status without looking into technical details.

- Team Dashboard:

  - Provide more detailed metrics and data relevant to the team maintaining and improving the framework.

  - Include information on test failures, flakiness trends, test execution times, and resource utilization.

  - Incorporate additional features such as drill-down capabilities and trend analysis tools to support troubleshooting and optimization efforts.

  - Customize the dashboard based on the team’s specific needs and priorities, ensuring that it provides actionable insights for driving continuous improvement.

By tailoring dashboard design to the intended audience, teams can effectively communicate the state of the test framework and empower stakeholders to make informed decisions and drive improvements.
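One of the team-level metrics mentioned above, flakiness, can be derived from raw run history. The sketch below assumes one possible definition: a test is flaky if it both passed and failed on the same commit. The function names and the `(test_name, commit_sha, passed)` record shape are hypothetical; adapt them to whatever your CI exports.

```python
from collections import defaultdict


def flaky_tests(runs):
    """Return names of tests that both passed and failed on the same commit.

    `runs` is a list of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)
    for test_name, commit_sha, passed in runs:
        outcomes[(test_name, commit_sha)].add(passed)
    # A test is flagged when one commit produced both outcomes.
    return sorted(
        {name for (name, _), seen in outcomes.items() if seen == {True, False}}
    )


def flake_rate(runs):
    """Share of distinct tests that were flaky at least once."""
    all_tests = {name for name, _, _ in runs}
    if not all_tests:
        return 0.0
    return len(flaky_tests(runs)) / len(all_tests)
```

The list of names suits the team dashboard, where engineers need to drill down, while the single rate is the kind of stability indicator a management dashboard can trend over time.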

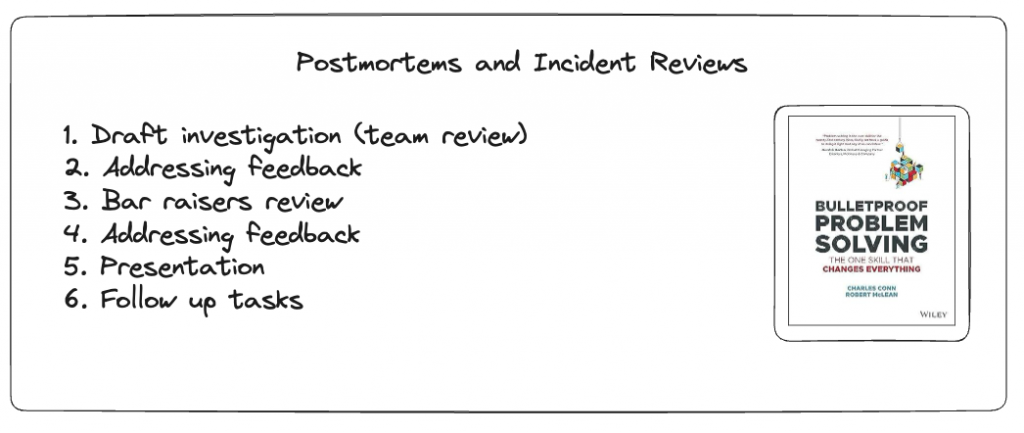

Last but not least, postmortems and incident reviews play a crucial role in ensuring the reliability and effectiveness of a test automation framework. While postmortems are commonly associated with high-severity incidents in production environments, they are equally valuable in the context of test automation. The goal of the framework is to identify and prevent issues before they reach production, but various factors can impact its stability, such as flaky tests, infrastructure limitations, and data dependencies.

Recognizing and addressing these issues is essential for building trust in the test automation framework among engineering teams. Postmortem reviews provide a structured approach to analyzing incidents and developing strategies for improvement.

In summary, while the shift-left approach is valuable for improving quality culture, it’s not the sole strategy to consider. Various approaches should be tailored to meet specific business needs, understanding that no one-size-fits-all solution exists. However, shift-left is a promising pattern that can enhance both quality and speed of delivery to market while reducing operational costs. Its focus on early testing and collaboration aligns well with modern software development practices, making it a compelling option for organizations looking to optimize their quality processes.