One of the biggest challenges in E2E testing is overcoming flaky tests. In this blog post, I will share a few approaches that I use to reduce flakiness in Cypress (the test framework I use), but before I do that, let’s figure out what a flaky test is and what the most common reasons for flakiness are.

Flaky tests are tests that exhibit both a passing and a failing status across multiple test runs for the same commit. Typically, when you perform the same steps manually you almost never see the issue; it only shows up when you run the automation script.

Symptoms

Like every issue, a flaky test has a root cause, and it is important to understand what those root causes are so you know what you are dealing with. Here are the most common reasons for tests to be flaky:

- Page load issue

- Loading animation or masks

- Event triggers

- Poor locators

- Data dependency

- Feature flags

- Slow network

Knowing those symptoms will help you identify the problem and how to address it, but before you start fixing anything you might want to put the flaky tests in quarantine.

Quarantine

So what happens when you discover a flaky test in your suite? You either fix it right away, which is almost never the case, or you place it in quarantine. Quarantine does not necessarily mean the test itself needs fixing; sometimes it is the condition causing the failure that needs to be improved. When you quarantine tests, you either keep them in the suite, expect them to fail, and mark them as flaky (I wouldn’t recommend this approach, and I will explain why in just a second), or you take them out of the main suite and place them in a separate suite where only flaky tests are executed. Let’s look at the pros and cons of both approaches.

Mark flaky but keep it in the suite – this will definitely remind you to address the test every time it fails, which is a plus. The problem with this approach is that you build a habit of ignoring the test, and it often gets overlooked because it is known to be flaky. Another issue is that your test suite loses credibility when it constantly shows flaky tests; people will stop trusting it altogether, and this is dangerous. I would really recommend avoiding this approach if possible.

Move the flaky test to a separate suite – this is the approach I typically go for, because the main regression suite (the one everyone treats as the test suite) contains only healthy tests, so if a test fails there is a reason for it. The downside is that over time you can build up a long list of tech debt, so make sure you address those tests as soon as possible. In my experience, I have had tests sitting in quarantine that would have caught critical bugs had they been fixed in time.

Cure

Timeouts. Cypress has several timeout settings; the ones I adjust most often are defaultCommandTimeout and pageLoadTimeout. If you run your tests on CI, you might also need a way to wait for the build to finish before the tests start. I typically use a Node package called wait-on for this.
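To make the two timeout settings concrete, here is a minimal sketch of how they might look in a Cypress configuration. The values are arbitrary assumptions, not recommendations (Cypress defaults to 4 seconds for commands and 60 seconds for page loads), and the name timeoutConfig is only for illustration.

```typescript
// Sketch of timeout settings for cypress.config.ts.
// The numbers are illustrative assumptions; tune them to your app and CI.
const timeoutConfig = {
  // How long a command such as cy.get() keeps retrying before failing (ms).
  defaultCommandTimeout: 8000,
  // How long cy.visit(), cy.go() and cy.reload() wait for the page load event (ms).
  pageLoadTimeout: 90000,
};

// In cypress.config.ts you would export it, for example:
//   import { defineConfig } from 'cypress';
//   export default defineConfig(timeoutConfig);
```

Raising timeouts globally slows down every real failure, so it is usually worth bumping them only as far as the slowest healthy run needs.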

Self-curing locators. With this approach, when one locator strategy fails you try another one. For example, your main strategy is CSS selectors (data-test-id, id, class, etc.), and if that fails for some reason you fall back to XPath, although using XPath with Cypress is not recommended. If you have ever used the Chrome DevTools Recorder, you can see an example of this in the tests it exports.
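The fallback idea can be sketched as a small helper that walks a list of candidate selectors and returns the first one that matches. This is only an illustration under assumptions: pickSelector and the selectors are hypothetical names, and the existence check is passed in as a function so the logic does not depend on the test runner.

```typescript
// Returns the first selector for which `matches` reports a hit.
// `matches` is injected so the fallback logic stays runner-independent.
function pickSelector(
  candidates: string[],
  matches: (selector: string) => boolean,
): string {
  for (const selector of candidates) {
    if (matches(selector)) {
      return selector;
    }
  }
  throw new Error(`No selector matched: ${candidates.join(', ')}`);
}

// Possible use inside a Cypress spec. Cypress.$ is a synchronous jQuery
// lookup, so unlike cy.get() it does not retry:
//   const selector = pickSelector(
//     ['[data-test-id="submit"]', '#submit', 'button.submit'],
//     (s) => Cypress.$(s).length > 0,
//   );
//   cy.get(selector).click();
```

Because the check itself does not retry, a fallback like this works best after the page has settled; otherwise it can pick a weaker selector just because the preferred element has not rendered yet.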

Data seeding. Having data you can rely on is crucial for the stability of your test infrastructure. So if the application under test permits data seeding, that is definitely the way to go. All you need to do is set up the necessary data before you run the tests (side note: don’t forget to tear it down once you are done testing). Unfortunately, data seeding is not always available, especially with complex applications that rely on many services. The next approach can help overcome that issue.

Set the data over API. In this approach, we set up the data while the tests are running, typically in before/after hooks. Remember, even with e2e tests you want minimal dependencies on things unrelated to what you are trying to test, so instead of going the UI route, which is slow and less reliable, use API endpoints to arrange data for the tests. This approach will not only reduce flakiness but also help you save money if your organization runs tests on a cloud CI service (GitHub Actions, CircleCI).
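One way to arrange data over the API is to keep the request definitions in small builder functions and hand them to cy.request() in hooks. The sketch below assumes a hypothetical /api/users endpoint that returns the created record's id in the response body, and a baseUrl set in the Cypress config; none of that comes from a real application.

```typescript
// Subset of the options object accepted by cy.request() used in this sketch.
interface SeedRequest {
  method: 'POST' | 'DELETE';
  url: string;
  body?: Record<string, unknown>;
}

// Hypothetical endpoint; replace with whatever your backend exposes.
const USERS_ENDPOINT = '/api/users';

// Request that creates a user the test can rely on.
function createUserRequest(name: string): SeedRequest {
  return { method: 'POST', url: USERS_ENDPOINT, body: { name } };
}

// Request that removes the user again during teardown.
function deleteUserRequest(id: number): SeedRequest {
  return { method: 'DELETE', url: `${USERS_ENDPOINT}/${id}` };
}

// Possible use in a spec:
//   beforeEach(() => {
//     cy.request(createUserRequest('e2e-user')).its('body.id').as('userId');
//   });
//   afterEach(function () {
//     cy.request(deleteUserRequest(this.userId));
//   });
```

Keeping setup and teardown as a pair makes it harder to forget the cleanup step mentioned under data seeding.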

Custom data attributes. It is a common approach in the industry to add test-specific data attributes to elements to avoid brittle CSS selectors; typically it is something like data-test-id or qa-dataId.
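If you go with data-test-id, a tiny helper keeps the selector format in one place. This is just a sketch; byTestId is a hypothetical name, and the attribute should match whatever your team actually uses.

```typescript
// Builds an attribute selector for the data-test-id convention.
function byTestId(id: string): string {
  return `[data-test-id="${id}"]`;
}

// Possible use in a Cypress spec:
//   cy.get(byTestId('login-button')).click();
```

If the attribute name ever changes, only the helper needs to be updated instead of every spec.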

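Viewport. Sometimes tests fail because the viewport they run in is not the same as the one you used while developing. In Cypress, you can set the viewport with the viewportWidth and viewportHeight configuration options, or change it for a single test with the cy.viewport() command.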
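As a rough example, pinning the viewport in the configuration could look like the sketch below. The 1280x720 size is an assumption chosen for illustration (Cypress defaults to 1000x660), and viewportConfig is a hypothetical name.

```typescript
// Sketch of viewport settings for cypress.config.ts.
// The size is an illustrative assumption; match it to how you develop.
const viewportConfig = {
  viewportWidth: 1280,
  viewportHeight: 720,
};

// To change the viewport for a single test instead:
//   cy.viewport(1280, 720);
//   cy.viewport('iphone-6'); // one of the built-in presets
```

Setting the same size locally and on CI removes one source of "works on my machine" differences.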

Retry failed. One of the great features modern frameworks offer is the ability to retry failed tests. I typically enable it only in headless mode and keep it to a single run when developing locally.

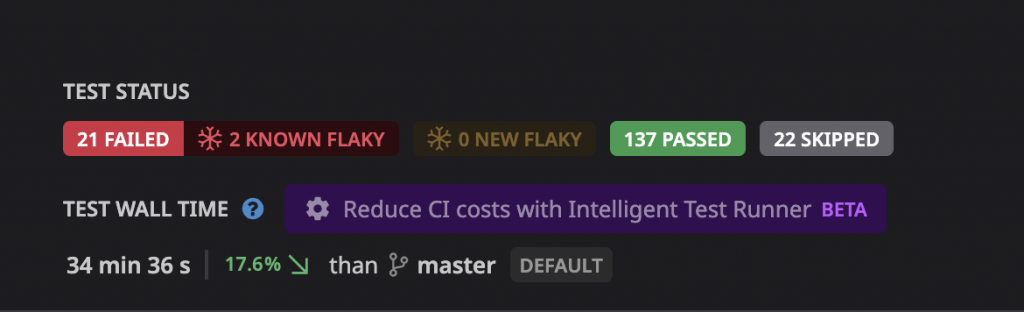
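In configuration terms, retrying only in headless mode could look roughly like this. The retry count of 2 is my assumption for the sketch, and retryConfig is a hypothetical name.

```typescript
// Sketch of retry settings for cypress.config.ts.
const retryConfig = {
  retries: {
    // Used by `cypress run` (headless): retry a failed test up to 2 more times.
    runMode: 2,
    // Used by `cypress open` (local development): no retries, a single run.
    openMode: 0,
  },
};
```

Keeping openMode at 0 means a flaky test still fails loudly while you are writing it, which is when it is cheapest to fix.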

Catch earlier. In my case, the test results are integrated with an observability tool, which reports when new flaky tests are introduced and shows what could be making them flaky. Typically it is one of two reasons: either a component was updated, or it is a brand-new test that was not built with stability in mind.

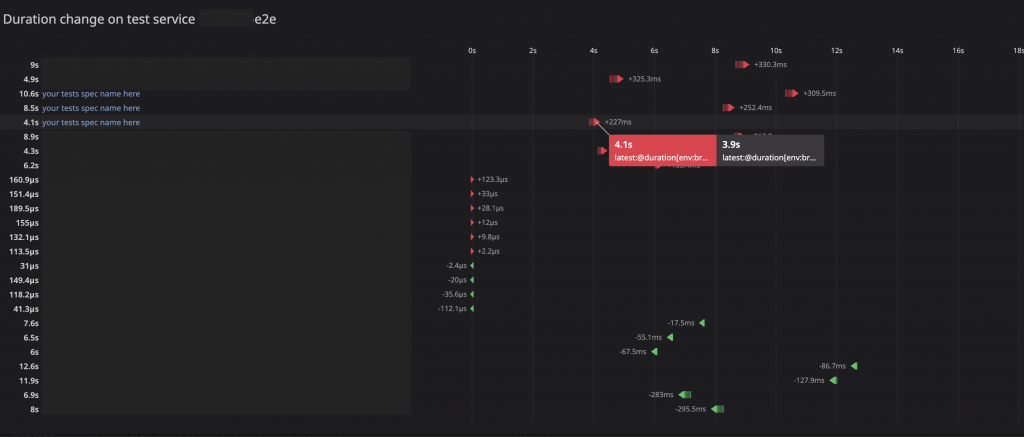

The other thing the observability tool can give you is feedback on how long certain tests take to execute. A slow test is not necessarily going to be flaky, but it can point to an issue, so keep a close eye on test performance.

Disable 3rd party requests. Analytics and tracking requests are very useful for collecting production-level data, but they slow down e2e tests and create extra dependencies that are not necessarily related to the source code you own. If you need to test a 3rd party integration, write one test that does that; for the rest of the suite there is no need to make those extra calls, so I highly recommend disabling them to reduce flakiness. Here is how you do it in Cypress:

    blockHosts: [
      '*maps.googleapis.com',
      '*.hubspot.com',
      '*.storage.googleapis.com'
    ]

Feature flags strategy. This one is a little tricky, and there are two options. One is to assume that everything hidden behind a feature flag is not ready for e2e regression testing; in this case, the build you test against needs to have feature flags disabled. The other option is to keep feature flags always enabled; in this case, you would have a separate directory for work-in-progress tests (similar to the quarantine suite I described earlier), and once the work is complete you move those tests to the main e2e directory.

Overall, I try to keep flaky tests at no more than 3% of the whole test suite, so that people do not lose trust in the test infrastructure. I hope this blog post was useful; feel free to post your questions or suggestions in the comments section below.